D.1.2 compression performance – Comtech EF Data CDM-840 User Manual


CDM-840 Remote Router

Revision 2

Appendix D

MN-CDM840

D–2

widely available as an open-source software tool; it does not require a software license.

Deflate-compressed blocks are wrapped with a header and footer to become GZIP files.
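To make the header/footer wrapping concrete, here is a minimal Python sketch based on the public GZIP file format (RFC 1952). It is not taken from the CDM-840 firmware, and the function name `wrap_deflate_as_gzip` is hypothetical. It produces a raw Deflate stream with the standard-library `zlib` module, then adds a minimal 10-byte header and the 8-byte CRC-32/length footer by hand.

```python
import gzip
import struct
import zlib

def wrap_deflate_as_gzip(data: bytes) -> bytes:
    """Compress `data` as raw Deflate, then wrap it in a minimal GZIP header and footer."""
    # Negative wbits gives a raw Deflate stream (no zlib header or trailer).
    compressor = zlib.compressobj(level=6, method=zlib.DEFLATED, wbits=-15)
    deflated = compressor.compress(data) + compressor.flush()

    # 10-byte header: ID1, ID2, CM (8 = Deflate), FLG, MTIME (4 bytes), XFL, OS (255 = unknown).
    header = struct.pack("<BBBBIBB", 0x1F, 0x8B, 8, 0, 0, 0, 255)

    # 8-byte footer: CRC-32 of the uncompressed data, then its length modulo 2**32.
    footer = struct.pack("<II", zlib.crc32(data) & 0xFFFFFFFF, len(data) & 0xFFFFFFFF)
    return header + deflated + footer

payload = b"CDM-840 " * 1000
blob = wrap_deflate_as_gzip(payload)
assert blob[:2] == b"\x1f\x8b"          # GZIP magic bytes
assert gzip.decompress(blob) == payload  # a standard GZIP reader accepts the wrapped block
```

The header and footer add a fixed 18 bytes around the Deflate data in this minimal form; optional header fields (file name, comment, extra data) are left out.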

Typically, when a classical, single, general-purpose CPU performs GZIP compression, either the compression ratio is scaled back to maximize data throughput, or the CPU runs slowly. To avoid either drawback, an efficient solution is to offload the compression task to a hardware-based GZIP function, as is done in the CDM-840. Hardware-based GZIP compression offloads lossless data compression and frees up the system's main CPUs. This allows the compression functions to operate not only independently, but also at much higher data rates if needed. The ASIC takes in uncompressed input data, compresses it, and outputs the data in compressed form. The compression hardware performs many tasks in parallel, independently of the CDM-840's central CPUs. This effectively eliminates the multi-pass, iterative processing typical of a classical, single, general-purpose CPU that is overtasked with executing the Deflate algorithm.
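The parallel processing described above takes place inside the ASIC. As a rough software analogy only, the sketch below splits the input into independent chunks and compresses them on a thread pool, so several Deflate jobs run concurrently instead of one after another. CPython's `zlib` generally releases the GIL while compressing, which lets the threads overlap. The function names, the 64 KiB chunk size, and the worker count are hypothetical choices. The output is a multi-member GZIP stream, which standard decompressors such as Python's `gzip.decompress` accept.

```python
import gzip
from concurrent.futures import ThreadPoolExecutor

CHUNK_SIZE = 64 * 1024  # hypothetical chunk size; each chunk is compressed independently

def compress_chunk(chunk: bytes) -> bytes:
    # Each chunk becomes a complete GZIP member (own header, Deflate data, footer).
    return gzip.compress(chunk, compresslevel=6)

def parallel_gzip(data: bytes, chunk_size: int = CHUNK_SIZE, workers: int = 4) -> bytes:
    """Compress `data` as a multi-member GZIP stream using a thread pool."""
    chunks = [data[i:i + chunk_size] for i in range(0, len(data), chunk_size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() preserves input order, so members are joined in the original sequence.
        members = list(pool.map(compress_chunk, chunks))
    return b"".join(members)

payload = bytes(range(256)) * 2_000
stream = parallel_gzip(payload)
assert gzip.decompress(stream) == payload  # all members decompress back to the full input
```

Chunking costs some compression ratio because each member starts with an empty Deflate history window. A dedicated hardware engine avoids this software trade-off.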

D.1.2 Compression Performance

Compression performance is classically measured by two metrics, size reduction and data throughput:

• Size reduction is usually reported as the ratio of the uncompressed (original) size to the compressed size.

• Data throughput is measured in bytes per second (B/s) on the uncompressed side of the GZIP ASIC.
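Both metrics can be computed in software as a point of reference. The sketch below times Python's `zlib` module, not the GZIP ASIC, so its throughput figure only illustrates how the metric is defined. The helper names are hypothetical.

```python
import time
import zlib

def compression_ratio(original_size: int, compressed_size: int) -> float:
    # Size reduction: uncompressed (original) size divided by compressed size.
    return original_size / compressed_size

def throughput_bytes_per_s(uncompressed_bytes: int, seconds: float) -> float:
    # Throughput is counted on the uncompressed side of the compressor.
    return uncompressed_bytes / seconds

def measure_zlib(data: bytes, level: int = 6) -> tuple[float, float]:
    """Return (ratio, bytes per second) for one software zlib pass over `data`."""
    start = time.perf_counter()
    compressed = zlib.compress(data, level)
    elapsed = max(time.perf_counter() - start, 1e-9)  # guard against a zero timer reading
    return compression_ratio(len(data), len(compressed)), throughput_bytes_per_s(len(data), elapsed)

ratio, rate = measure_zlib(b"CDM-840 compression " * 50_000)
print(f"ratio {ratio:.1f}:1, throughput {rate / 1e6:.1f} MB/s")
```

Unlike the hardware behavior described next, software Deflate throughput usually depends on both the input data and the chosen compression level.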

Data complexity has no effect on data throughput. Easy-to-compress files that achieve high compression ratios pass through the co-processor at the same high data rate as very complex data, which achieves lower compression ratios.

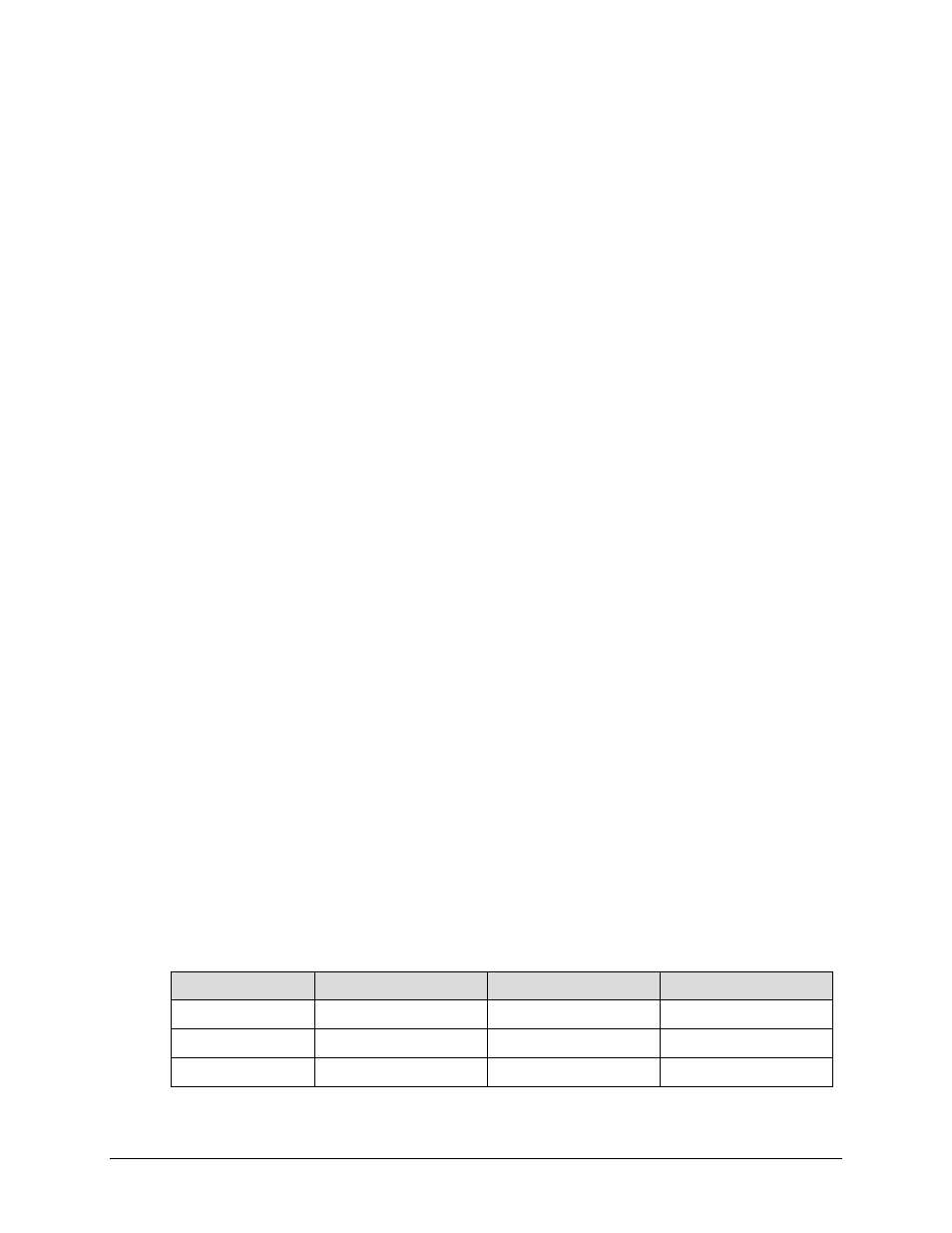

Table D-1 compares Comtech AHA GZIP compression ratios with those of the LZS and ALDC algorithms on the industry-standard Calgary Corpus and Canterbury Corpus file sets. The HTML file set is drawn from a collection of dynamic Internet content; LZS (Lempel-Ziv-Stac) compression results are based on publicly available descriptions of the LZS algorithm.

Table D-2 compares the effects of the CTOG specification on current operation, based on session-based compression for which the current performance specifications are given.

Table D-1. Comtech AHA GZip Performance Comparisons

| File Sets | Comtech AHA363-PCIe | LZS | ALDC |
|---|---|---|---|
| Calgary Corpus | 2.7:1 | 2.2:1 | 2.1:1 |
| Canterbury Corpus | 3.6:1 | 2.7:1 | 2.7:1 |
| HTML | 4.4:1 | 3.4:1 | 2.65:1 |
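The ratios in Table D-1 follow the size-reduction definition given in D.1.2 (uncompressed size divided by compressed size). As a worked illustration, the short Python sketch below converts each ratio into the fraction of the original size that is removed, using 1 − 1/ratio. The dictionary only restates the table values, and `space_savings` is a hypothetical helper name.

```python
# Compression ratios restated from Table D-1 (uncompressed size / compressed size).
TABLE_D1 = {
    "Calgary Corpus":    {"AHA363-PCIe": 2.7, "LZS": 2.2, "ALDC": 2.1},
    "Canterbury Corpus": {"AHA363-PCIe": 3.6, "LZS": 2.7, "ALDC": 2.7},
    "HTML":              {"AHA363-PCIe": 4.4, "LZS": 3.4, "ALDC": 2.65},
}

def space_savings(ratio: float) -> float:
    """Fraction of the original size removed: 1 - compressed/original = 1 - 1/ratio."""
    return 1.0 - 1.0 / ratio

for file_set, results in TABLE_D1.items():
    row = ", ".join(f"{alg} {space_savings(r):.1%}" for alg, r in results.items())
    print(f"{file_set}: {row}")
```

For example, the 4.4:1 result on the HTML file set corresponds to removing about 77% of the original size (1 − 1/4.4 ≈ 0.773).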